Last November I was able to attend Blizzcon in Anaheim. Blizzcon is the annual convention hosted by Blizzard Entertainment (creators of Overwatch, Diablo, Starcraft, World of Warcraft, etc.). In the past the focus has been solely on the games and the game developers. In the last 2-3 years there have been more panels that offer a look “behind the curtain”, with more information about design processes and engineering practices at Blizzard. I went to 2 panels that highlighted this: one on engineering and the other on level design. Some points that jumped out were:

During the Q&A for the engineering panel, the engineers were asked whether any technologies were mandated across the business or in particular areas. The response? They use whatever technology or tools make sense for each area of the business and its needs. The team that handles the websites, for example, ends up using technologies that make sense for web work. This led into a discussion about Blizzard’s use of APIs as the means to allow these different technology islands to talk to each other. This approach allows the best tools for the job in each area, but it creates a reliance on ensuring that API changes don’t have downstream effects. Which leads into the next topic…

There was an interesting reference to how Blizzard deals with keeping documentation up to date. With their reliance on APIs, there would most likely be a process where changes have to be tested. Part of their test model involves taking sample data and assets from the documentation and running tests with them. If the documentation’s samples haven’t been updated to reflect changes in functionality, the test should fail and be flagged. This approach isn’t completely foolproof, but it was an interesting answer to the problem of stale documentation in IT.
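The panel didn't go into how this is actually implemented, so the following is only a minimal sketch of the general idea, using Python's standard `doctest` module. Everything named here (`character_summary`, `character_summary_v2`, `DOCS`, `run_doc_samples`) is made up for illustration and has nothing to do with Blizzard's real tooling. The documentation text carries a sample call and its expected result, and the test runs that sample against the current code.

```python
import doctest


# Hypothetical API function that the documentation describes.
def character_summary(name, level):
    return f"{name} (level {level})"


# Hypothetical later version whose output format changed,
# while the documentation sample was left untouched.
def character_summary_v2(name, level):
    return f"{name}, level {level}"


# Hypothetical documentation text with an embedded sample.
DOCS = """
Call character_summary with a name and a level:

>>> character_summary("Thrall", 60)
'Thrall (level 60)'
"""


def run_doc_samples(docs, namespace):
    """Run every sample found in the docs text; return (failed, attempted)."""
    parser = doctest.DocTestParser()
    test = parser.get_doctest(docs, dict(namespace), "docs", None, 0)
    runner = doctest.DocTestRunner(verbose=False)
    # Discard the failure report; only the counts matter here.
    results = runner.run(test, out=lambda text: None)
    return results.failed, results.attempted


# Docs and code agree, so nothing fails.
current = run_doc_samples(DOCS, {"character_summary": character_summary})

# The code changed but the docs did not, so the sample fails and gets flagged.
stale = run_doc_samples(DOCS, {"character_summary": character_summary_v2})
```

As noted above, this isn't foolproof: it only catches drift in the parts of the documentation that actually contain runnable samples.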

The level design panel blew away one major assumption I had about Blizzard’s level design process for World of Warcraft. My assumption was that the game designers would detail the game world to a fine degree. The level design people would build that without much scope for changing things. The reality was that the game designers would only outline what a particular zone or area would need (mostly in terms of quest flow or general look and feel). It was the level designers who would flesh out the world. Many of those pieces of “character” or “flavour” in the game world were due to the level designers filling those gaps with their own stories.

I’m hoping they’ll keep doing these sorts of panels in the future. One with a bit more focus on the infrastructure side of things would be cool to see.

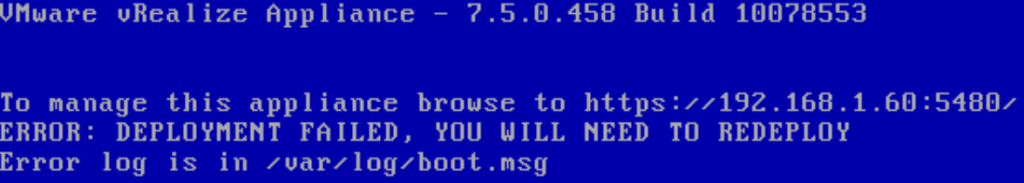

Anyone who has had to set up vRealize Automation would probably know the sinking feeling I started to get. Setting up vRA again from scratch was not something I really wanted to do…
