The recent batch of high-profile security incidents at various companies in Australia highlights the need for appropriate security measures across all components of an organisation’s infrastructure. Defender for DevOps is a new add-on (in preview) to Defender for Cloud. It provides security functionality for your code repositories and associated components.

Setup

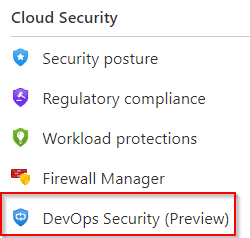

In the Defender for Cloud interface, a new option appears under the “Cloud Security” heading.

Clicking this option opens an introductory splash page with steps for getting started. The first step is to connect your environments. Both Azure DevOps and GitHub repositories are supported.